Intro

Hi guys! Today we are going to talk about uploading files to an Amazon S3 bucket from your Spring Boot application.

As you may have noticed, almost every application, mobile or web, lets users upload their images, photos, avatars and so on. So you, as a developer, have to decide how to save these files and where to store them. There are different approaches for storing files:

- directly on the server where an application is deployed

- in the database as a binary file

- in cloud storage

In my own practice I always prefer the last approach, as it seems to be the best one for me (but of course you might have your own opinion). As a cloud service I use Amazon S3. And here is why:

- it’s easy to programmatically upload any file using their API

- Amazon provides SDKs for a lot of programming languages

- there is a web interface where you can see all of your uploaded files

- you can manually upload/delete any file using the web interface

Account Configuration

To start using S3 you need to create an account on the Amazon website. The registration procedure is easy and clear enough, but you will have to verify your phone number and enter your credit card info (don’t worry, your card will not be charged unless you buy some services).

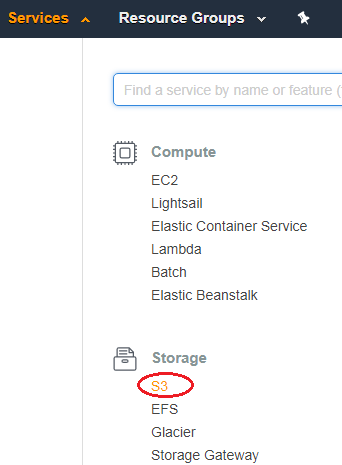

After creating the account we need to create an S3 bucket. Go to Services -> S3.

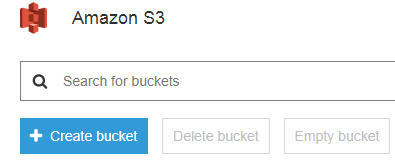

Then press the ‘Create bucket’ button.

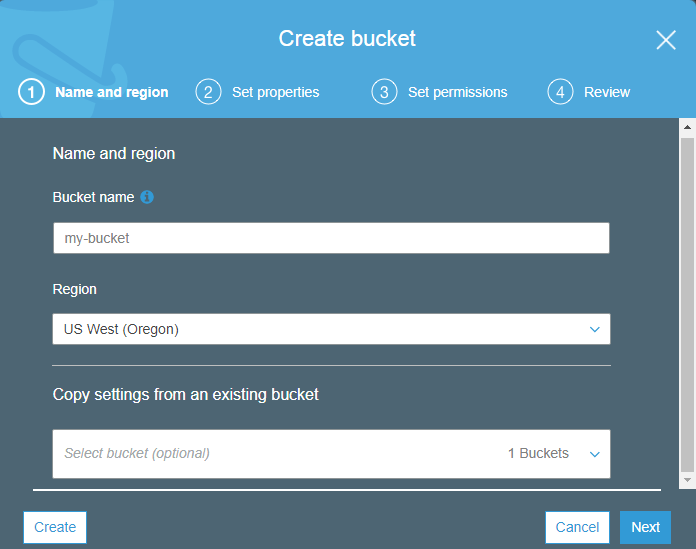

Enter your bucket name (it must be globally unique) and choose the region that is closest to you. Press the ‘Create’ button.

NOTE: Amazon will give you 5GB of storage for free for the first year. After reaching this limit you will have to pay for using it.

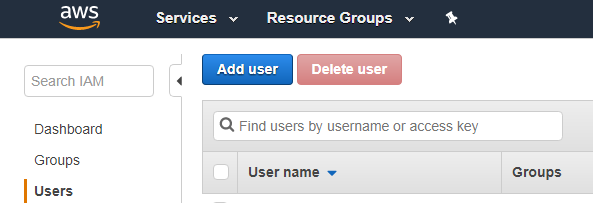

Now your bucket is created, but we need to give users permission to access it. It is not secure to give the access keys of your root user to your developer team or anyone else. We need to create a new IAM user and give it permission to use only S3.

AWS Identity and Access Management (IAM) is a web service that helps you securely control access to AWS resources.

Let’s create such a user. Go to Services -> IAM. In the navigation pane, choose Users and then choose Add user.

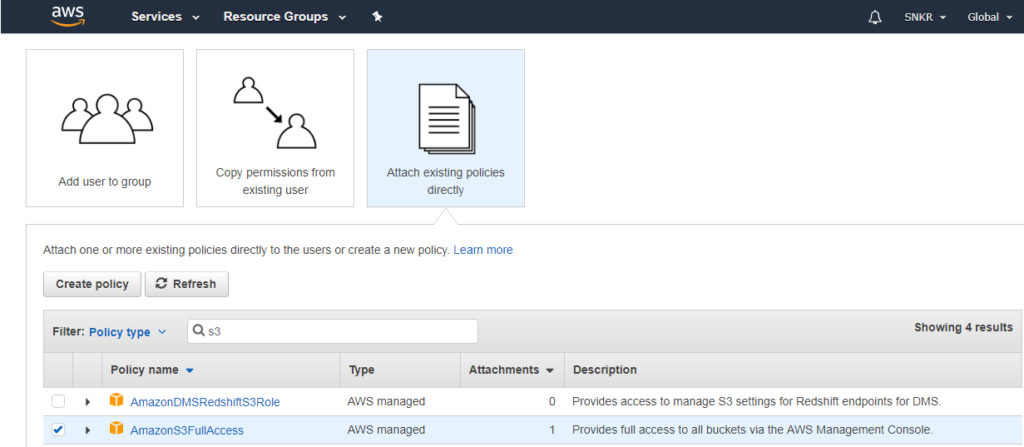

Enter the user’s name and check the access type ‘Programmatic access’. Press the next button. We need to add permissions to this user. Press ‘Attach existing policies directly’, enter ‘s3’ in the search field and choose AmazonS3FullAccess among the permissions found.

Then press next and ‘Create User’. If you did everything right, you should see the Access key ID and Secret access key for your user. There is also a ‘Download .csv’ button for downloading these keys, so please click on it in order not to lose them.

Our S3 bucket configuration is done, so let’s proceed to the Spring Boot application.

Spring Boot Part

Let’s create a Spring Boot project and add the Amazon dependency:

    <dependency>
        <groupId>com.amazonaws</groupId>
        <artifactId>aws-java-sdk</artifactId>
        <version>1.11.133</version>
    </dependency>

Now let’s add s3 bucket properties to our application.yml file:

    amazonProperties:
      endpointUrl: https://s3.us-east-2.amazonaws.com
      accessKey: XXXXXXXXXXXXXXXXX
      secretKey: XXXXXXXXXXXXXXXXXXXXXXXXXX
      bucketName: your-bucket-name

It’s time to create our RestController with two request mappings: “/uploadFile” and “/deleteFile”.

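    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.DeleteMapping;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestPart;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.multipart.MultipartFile;

    @RestController
    @RequestMapping("/storage/")
    public class BucketController {

        private final AmazonClient amazonClient;

        @Autowired
        BucketController(AmazonClient amazonClient) {
            this.amazonClient = amazonClient;
        }

        // Expects a multipart/form-data request with a "file" part.
        @PostMapping("/uploadFile")
        public String uploadFile(@RequestPart(value = "file") MultipartFile file) {
            return this.amazonClient.uploadFile(file);
        }

        // Expects a multipart/form-data request with a "url" part.
        @DeleteMapping("/deleteFile")
        public String deleteFile(@RequestPart(value = "url") String fileUrl) {
            return this.amazonClient.deleteFileFromS3Bucket(fileUrl);
        }
    }

There is nothing special in the controller, except that the uploadFile() method receives a MultipartFile as a RequestPart.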

This code does not compile yet because we don’t have the AmazonClient class, so let’s create this class with the following fields.

    import com.amazonaws.auth.AWSCredentials;
    import com.amazonaws.auth.AWSStaticCredentialsProvider;
    import com.amazonaws.auth.BasicAWSCredentials;
    import com.amazonaws.regions.Regions;
    import com.amazonaws.services.s3.AmazonS3;
    import com.amazonaws.services.s3.AmazonS3ClientBuilder;
    import javax.annotation.PostConstruct;
    import org.springframework.beans.factory.annotation.Value;
    import org.springframework.stereotype.Service;

    @Service
    public class AmazonClient {

        private AmazonS3 s3client;

        @Value("${amazonProperties.endpointUrl}")
        private String endpointUrl;

        @Value("${amazonProperties.bucketName}")
        private String bucketName;

        @Value("${amazonProperties.accessKey}")
        private String accessKey;

        @Value("${amazonProperties.secretKey}")
        private String secretKey;

        @PostConstruct
        private void initializeAmazon() {
            AWSCredentials credentials = new BasicAWSCredentials(this.accessKey, this.secretKey);
            this.s3client = AmazonS3ClientBuilder.standard().withCredentials(new AWSStaticCredentialsProvider(credentials))
                    .withRegion(Regions.US_EAST_2).build();
        }
    }

AmazonS3 is an interface from the Amazon dependency. All other fields just hold the values from our application.yml file. The @Value annotation binds application properties directly to class fields during application initialization.

We added the initializeAmazon() method to pass the Amazon credentials to the Amazon client. The @PostConstruct annotation is needed to run this method after the constructor has been called, because class fields marked with the @Value annotation are still null in the constructor.

The S3 upload call we are going to use takes a File as a parameter, but we have a MultipartFile, so we need to add a method that makes this conversion.

    private File convertMultiPartToFile(MultipartFile file) throws IOException {
        File convFile = new File(file.getOriginalFilename());
        // try-with-resources closes the stream even if the write fails
        try (FileOutputStream fos = new FileOutputStream(convFile)) {
            fos.write(file.getBytes());
        }
        return convFile;
    }

Also, you can upload the same file many times, so we should generate a unique name for each upload. Let’s use a timestamp and also replace all spaces in the filename with underscores to avoid issues in the future.

    private String generateFileName(MultipartFile multiPart) {
        return new Date().getTime() + "-" + multiPart.getOriginalFilename().replace(" ", "_");
    }

Now let’s add a method which uploads the file to the S3 bucket.

    private void uploadFileTos3bucket(String fileName, File file) {
        s3client.putObject(new PutObjectRequest(bucketName, fileName, file)
                .withCannedAcl(CannedAccessControlList.PublicRead));
    }

In this method we add PublicRead permissions to the file. It means that anyone who has the file URL can access this file. It’s a good practice for images only, because you will probably display these images on your website or in your mobile application, so you want to be sure that each user can see them.
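Since a public-read object is reachable through a URL that contains its name, it is worth making that name predictable in shape and hard to collide. A timestamp alone can repeat if two files with the same name arrive in the same millisecond. The following is only a sketch of one alternative, not part of the original project: the class ObjectKeys and the method uniqueKey() are hypothetical names, and the set of allowed characters is an assumption.

```java
import java.util.UUID;

final class ObjectKeys {
    private ObjectKeys() {}

    // Hypothetical helper: builds a collision-resistant, URL-friendly object name.
    static String uniqueKey(String originalFilename) {
        String name = (originalFilename == null) ? "" : originalFilename;
        // Some clients send a full path; keep only the last segment.
        name = name.substring(Math.max(name.lastIndexOf('/'), name.lastIndexOf('\\')) + 1);
        // Replace everything outside a conservative character set with underscores.
        name = name.replaceAll("[^A-Za-z0-9._-]", "_");
        if (name.isEmpty()) {
            name = "file";
        }
        // A random UUID prefix replaces the timestamp used in generateFileName().
        return UUID.randomUUID() + "-" + name;
    }
}
```

It could be called as ObjectKeys.uniqueKey(multiPart.getOriginalFilename()) in place of the timestamp-based name.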

Finally, we will combine all these methods into one general method that is called from our controller. This method saves a file to the S3 bucket and returns the fileUrl, which you can store in the database. For example, you can attach this URL to the user’s model if it’s a profile image.

    public String uploadFile(MultipartFile multipartFile) {
        String fileUrl = "";
        try {
            File file = convertMultiPartToFile(multipartFile);
            String fileName = generateFileName(multipartFile);
            uploadFileTos3bucket(fileName, file);
            // Build the URL only after the upload succeeded, so a failure returns "".
            fileUrl = endpointUrl + "/" + bucketName + "/" + fileName;
            // Remove the local copy once it has been uploaded.
            file.delete();
        } catch (Exception e) {
            e.printStackTrace();
        }
        return fileUrl;
    }

The only thing left to add is the method for deleting a file.

    public String deleteFileFromS3Bucket(String fileUrl) {
        // The file name is everything after the last "/" in the URL.
        String fileName = fileUrl.substring(fileUrl.lastIndexOf("/") + 1);
        // The bucket name is passed as is, without a trailing slash.
        s3client.deleteObject(new DeleteObjectRequest(bucketName, fileName));
        return "Successfully deleted";
    }

S3 cannot delete a file by its URL. It requires a bucket name and a file name, which is why we extract the file name from the URL.
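Taking everything after the last "/" works for the flat names generated in this post, but it would cut off part of the name if a file were ever stored under a prefix such as avatars/1.png. As a rough sketch only, assuming the URL has the endpointUrl/bucketName/fileName shape built in uploadFile(), the name could be extracted like this. S3KeyExtractor and keyFromUrl() are hypothetical names, not part of the original project.

```java
import java.net.URI;

final class S3KeyExtractor {
    private S3KeyExtractor() {}

    // Hypothetical helper: returns the part of the URL path that follows "/<bucketName>/".
    static String keyFromUrl(String fileUrl, String bucketName) {
        // getPath() drops the query string and decodes percent-escapes.
        String path = URI.create(fileUrl).getPath();
        String prefix = "/" + bucketName + "/";
        if (path == null || !path.startsWith(prefix) || path.length() == prefix.length()) {
            throw new IllegalArgumentException(
                    "Not a file URL for bucket " + bucketName + ": " + fileUrl);
        }
        return path.substring(prefix.length());
    }
}
```

A URL that points to a different bucket is rejected instead of silently deleting a file with the same name from our bucket.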

Testing time

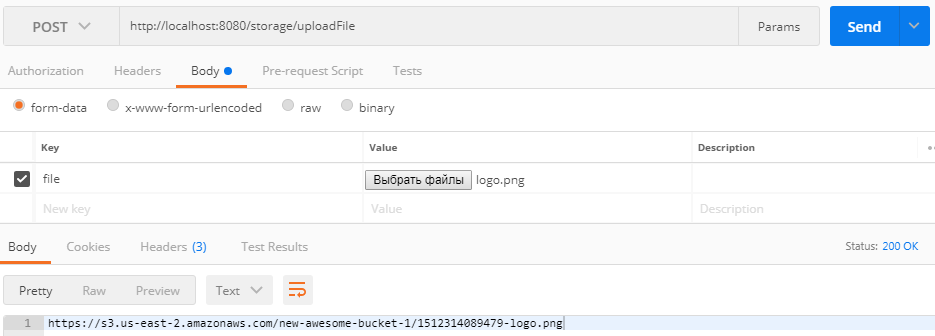

Let’s test our application by making requests with Postman. We need to choose the POST method and select ‘form-data’ in the Body. As a key we should enter ‘file’ and choose the value type ‘File’. Then choose any file from your PC as a value. The endpoint URL is http://localhost:8080/storage/uploadFile (Spring Boot serves plain HTTP on port 8080 unless you configure SSL).

If you did everything correctly, you should get the file URL in the response body.
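The same upload request can also be put together in code instead of Postman. Below is a minimal sketch using the java.net.http API from Java 11. It assumes the application runs locally over plain HTTP on port 8080; UploadRequestSketch and its methods are hypothetical names, and the boundary is any string that does not occur in the file content.

```java
import java.io.ByteArrayOutputStream;
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

final class UploadRequestSketch {
    private UploadRequestSketch() {}

    // Builds a multipart/form-data body with a single part named "file".
    static byte[] multipartBody(String boundary, String fileName, byte[] content) {
        String head = "--" + boundary + "\r\n"
                + "Content-Disposition: form-data; name=\"file\"; filename=\"" + fileName + "\"\r\n"
                + "Content-Type: application/octet-stream\r\n\r\n";
        String tail = "\r\n--" + boundary + "--\r\n";
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.writeBytes(head.getBytes(StandardCharsets.UTF_8));
        out.writeBytes(content);
        out.writeBytes(tail.getBytes(StandardCharsets.UTF_8));
        return out.toByteArray();
    }

    // Builds (but does not send) the POST request for the upload endpoint.
    static HttpRequest uploadRequest(String endpoint, String boundary, String fileName, byte[] content) {
        return HttpRequest.newBuilder(URI.create(endpoint))
                .header("Content-Type", "multipart/form-data; boundary=" + boundary)
                .POST(HttpRequest.BodyPublishers.ofByteArray(multipartBody(boundary, fileName, content)))
                .build();
    }
}
```

To actually send it, pass the request to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) and read the file URL from the response body.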

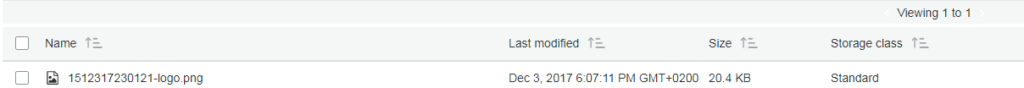

And if you open your S3 bucket on Amazon then you should see one uploaded image there.

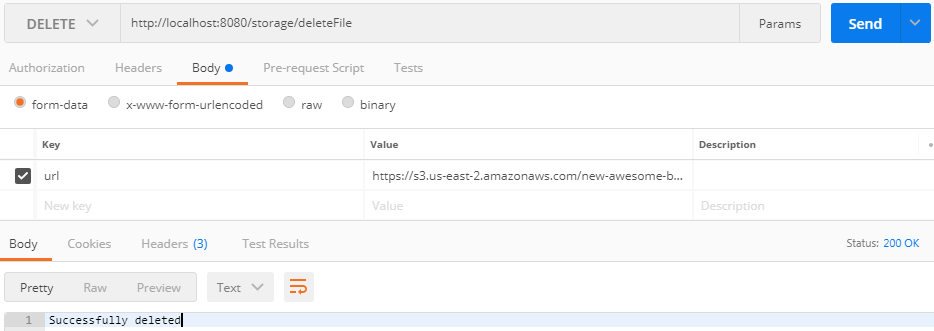

Now let’s test our delete method. Choose the DELETE method with the endpoint URL http://localhost:8080/storage/deleteFile. The body type is the same: ‘form-data’, the key is ‘url’, and into the value field enter the fileUrl returned by the upload request.

After sending the request you should get the message ‘Successfully deleted’.

Conclusion

That’s basically it. Now you can easily use an S3 bucket in your own projects. I hope this was helpful for you. If you have any questions, please feel free to leave a comment. Thank you for reading, and do not forget to press the Clap button if you liked this post.

You can check out the full example of this application on my GitHub account. You can also find it on the Oril Software GitHub.