Prompt engineering is a cutting-edge approach to crafting effective and efficient conversational AI systems. In an age where human-computer interactions are becoming increasingly prevalent, mastering the art of prompt engineering is vital. This article delves into the principles, strategies, and best practices behind prompt engineering.

What is prompt engineering?

Prompt engineering is the practice of crafting carefully structured prompts or instructions to guide the behavior of AI models, particularly in natural language processing (NLP) tasks. It is a key way to steer a model toward desired outcomes without retraining it, in applications including chatbots, language translation, text generation, and question-answering systems.

In prompt engineering, the goal is to provide the AI model with input that elicits the desired response. This often involves choosing words, phrases, or formats that steer the AI towards generating accurate and contextually appropriate responses. Effective prompt engineering can significantly improve AI systems’ performance, accuracy, and reliability.

Prompt engineering is an iterative process that requires experimentation and refinement to achieve the desired results. It plays a vital role in harnessing the capabilities of AI models to create meaningful and valuable interactions between humans and machines.

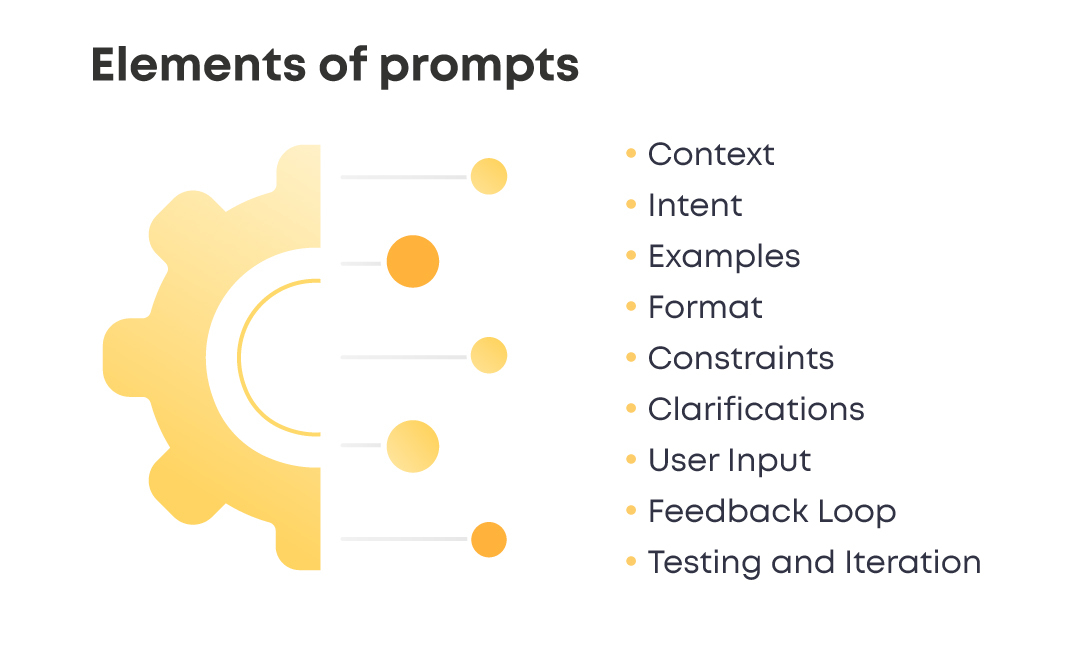

Elements of prompts

Prompts for AI models typically consist of several key elements that together communicate the request effectively. These elements include:

- Context: Providing context is essential for ensuring the AI understands the prompt’s purpose. Context can include information about the topic, the user’s previous statements or questions, and relevant background information.

- Intent: Clearly state what you want the AI to do or answer. Be specific about the task or the type of response you are seeking. For example, if you’re using a language model, you might specify that you want it to summarize a paragraph or answer a factual question.

- Examples: Including examples can help clarify your intent and provide the AI with reference points for generating responses. These examples can be in the form of sample sentences or queries related to the task.

- Format: Specify the format or structure to which you want the response to adhere. This might involve specifying the type of answer (e.g., in a sentence or a paragraph), the tone (formal or informal), or any other relevant formatting details.

- Constraints: To ensure the response aligns with your requirements, you can set constraints. These constraints may limit the response length, restrict certain types of content, or emphasize specific aspects of the answer.

- Clarifications: In complex or ambiguous tasks, it’s helpful to include instructions for the AI to seek clarifications if uncertain about the prompt’s meaning. This can encourage more accurate responses.

- User Input: In interactive systems, prompts often include the user’s input or query. Including the user’s question or statement gives the AI context and guides its response.

- Feedback Loop: For machine learning-based systems, including a feedback loop allows you to evaluate and improve the AI’s responses over time. You can provide feedback on the quality of the generated responses and use this information for model refinement.

- Testing and Iteration: The process of prompt engineering often involves testing different variations of prompts and iterating to find the most effective one. Experimentation is crucial in fine-tuning the AI’s performance.

Remember that the effectiveness of prompts may vary depending on the specific AI model and the task at hand. Crafting clear and informative prompts is a skill that improves with practice, and it’s a critical aspect of achieving desired outcomes in AI interactions.
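The prompt-side elements above can be combined mechanically. A minimal Python sketch, assuming a plain-text prompt with one labeled section per element; `PromptSpec` and `build_prompt` are hypothetical names, and the section labels ("Context:", "Task:", and so on) are an arbitrary choice rather than a required format. Feedback loops and testing happen outside the prompt, so they are not represented.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt elements described above."""
    intent: str                                            # what the model should do
    context: str = ""                                      # background the model needs
    examples: list[str] = field(default_factory=list)      # reference points
    output_format: str = ""                                # e.g. "one paragraph"
    constraints: list[str] = field(default_factory=list)   # length, content limits
    clarify_if_unsure: bool = False                        # ask instead of guessing
    user_input: str = ""                                   # the user's own text or question


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty elements into one prompt, one labeled section each."""
    sections = []
    if spec.context:
        sections.append(f"Context: {spec.context}")
    sections.append(f"Task: {spec.intent}")
    if spec.examples:
        sections.append("Examples:\n" + "\n".join(f"- {e}" for e in spec.examples))
    if spec.output_format:
        sections.append(f"Format: {spec.output_format}")
    if spec.constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in spec.constraints))
    if spec.clarify_if_unsure:
        sections.append("If the request is ambiguous, ask one clarifying question instead of guessing.")
    if spec.user_input:
        sections.append(f"Input: {spec.user_input}")
    return "\n\n".join(sections)


spec = PromptSpec(
    intent="Summarize the paragraph below.",
    output_format="One sentence, formal tone.",
    constraints=["At most 25 words."],
    user_input="Prompt engineering is the practice of crafting structured instructions for AI models.",
)
print(build_prompt(spec))
```

Empty elements are simply left out, so the same helper works for a bare one-line request and for a fully specified prompt.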

Common use cases

Prompts are a versatile tool used in various natural language processing (NLP) and machine learning tasks. Common use cases include:

- Text Generation: Generating human-like text, whether it’s for creative writing, content generation, or chatbots. Prompts can guide the AI in generating text with specific themes, tones, or styles.

- Translation: Translating text from one language to another. Prompts can specify the source language, the target language, and the text to be translated.

- Summarization: Summarizing lengthy documents, articles, or paragraphs into shorter, more concise versions. Prompts can instruct the AI to provide a summary of a given text.

- Question Answering: Answering questions based on a given context or passage. Prompts typically include the question and the relevant context.

- Language Understanding: Recognizing user intent in chatbots or virtual assistants. Prompts can include user queries or requests for specific actions.

- Sentiment Analysis: Analyzing a piece of text’s sentiment (positive, negative, neutral). Prompts can specify the text to be analyzed and request sentiment scores.

- Data Extraction: Extracting structured information from unstructured text. Prompts can guide the AI in extracting specific data fields like names, dates, or locations from documents.

- Text Classification: Classifying text into predefined categories or labels. Prompts can include the text to be classified and request the category or label.

- Recommendation Systems: Building recommendation engines for products, content, or services. Prompts can include user preferences and context to generate personalized recommendations.

- Conversational AI: Developing chatbots, virtual assistants, or customer support bots. Prompts help simulate user interactions by providing user queries and system responses.

- Grammar Correction: Correcting grammar, spelling, or punctuation errors in text. Prompts can include the input text and request the corrected version.

- Image Captioning: Generating descriptive captions for images. Prompts may include details about the image and instruct the AI to provide a caption.

- Code Generation: Generating code snippets or scripts based on high-level instructions. Prompts can provide the problem statement and request code solutions.

These are just a few examples of how prompts are used across various NLP and machine-learning tasks. The effectiveness of prompts often depends on the specific use case and the capabilities of the AI model being employed.
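For the Data Extraction use case, asking for a fixed machine-readable format makes the reply easy to check. A sketch assuming the model is asked for a single JSON object; the field names are hypothetical, and the model call itself is omitted, so the reply in the usage line is a made-up string.

```python
import json

FIELDS = ("name", "date", "location")  # hypothetical fields for this sketch


def extraction_prompt(document: str) -> str:
    """Ask for the fields as one JSON object so the reply is machine-readable."""
    keys = ", ".join(f'"{f}"' for f in FIELDS)
    return (
        "Extract the following fields from the text and reply with one JSON "
        f"object with the keys {keys}. Use null for any field that is not present.\n\n"
        f"Text: {document}"
    )


def parse_extraction(reply: str) -> dict:
    """Parse the model's reply; raise ValueError if it is not the expected JSON."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [f for f in FIELDS if f not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return {f: data[f] for f in FIELDS}


# A made-up reply, standing in for real model output:
print(parse_extraction('{"name": "Jane Doe", "date": "2024-05-01", "location": null}'))
```

Rejecting malformed replies with an exception gives the application a clear point to retry the prompt or fall back to manual review.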

Prompting techniques to control the output

Controlling the output of AI models using prompting techniques is essential to ensure that generated responses align with the desired objectives. Here are some standard prompting techniques used to control AI model output:

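Length control. This technique limits the length of the response by stating a maximum number of words, sentences, or characters in the prompt. Models measure length in tokens (sub-word units) rather than words, so a stated word limit is followed only approximately, but it keeps responses brief.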

Prompt example: “Precisely describe what prompt engineering is. The output should contain 20 words max.”

Response: “Prompt engineering tailors inputs to guide AI models, ensuring desired outcomes in natural language processing tasks.”
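Because a stated word limit is only followed approximately, it can be worth checking the reply before using it. A minimal sketch; `word_count` and `within_limit` are hypothetical helpers, and splitting on whitespace is a rough stand-in for however your application defines a word. Most model APIs also offer a maximum-token setting, which cuts off generation rather than shaping it.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words (a rough proxy; models count tokens)."""
    return len(text.split())


def within_limit(text: str, max_words: int) -> bool:
    """True if the reply respects the word limit stated in the prompt."""
    return word_count(text) <= max_words


response = ("Prompt engineering tailors inputs to guide AI models, ensuring "
            "desired outcomes in natural language processing tasks.")
print(word_count(response), within_limit(response, 20))  # 16 True
```

If the check fails, a common next step is to re-prompt with the limit restated rather than truncating the text mid-sentence.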

Audience control. This technique involves instructing AI models to tailor responses for specific audiences or user groups.

Prompt example: “Explain what prompt engineering is to a 5-year-old kid.”

Response: “Okay, imagine you have a super smart robot friend who can do all sorts of things. To make the robot do what you want, you have to give it instructions, like telling it what to do step by step. Well, “prompt engineering” is like figuring out the best way to talk to your robot friend so it understands and does exactly what you want it to do. It’s like choosing the right words and sentences to make your robot buddy do cool stuff!”

Tone control. This technique instructs AI models to generate responses with a specific emotional or conversational tone. Depending on the context and communication goal, it guides the AI to use language and style that match the desired tone, such as formal, friendly, empathetic, or professional.

Prompt example: “Write a persuasive email to a dissatisfied customer regarding a product issue. Maintain a calm and empathetic tone.”

Response: “Dear [Customer’s Name],

I understand your concerns about the product issue you’ve encountered. We sincerely apologize for any inconvenience caused…”
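Audience and tone controls are both extra instructions appended to a task, so they fit naturally as optional parameters of one reusable template. A sketch with a hypothetical `controlled_prompt` helper; the exact wording it appends is an assumption that mirrors the two example prompts above.

```python
from typing import Optional


def controlled_prompt(task: str, audience: Optional[str] = None,
                      tone: Optional[str] = None) -> str:
    """Append audience and tone instructions to a task (hypothetical helper)."""
    parts = [task.rstrip(".") + "."]  # normalize to exactly one trailing period
    if audience:
        parts.append(f"Write for {audience}.")
    if tone:
        parts.append(f"Maintain a {tone} tone.")
    return " ".join(parts)


print(controlled_prompt("Explain what prompt engineering is",
                        audience="a 5-year-old kid"))
# Explain what prompt engineering is. Write for a 5-year-old kid.
print(controlled_prompt(
    "Write a persuasive email to a dissatisfied customer regarding a product issue",
    tone="calm and empathetic"))
# Write a persuasive email to a dissatisfied customer regarding a product issue. Maintain a calm and empathetic tone.
```

Keeping the controls as parameters makes it easy to test several audiences or tones against the same task during iteration.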

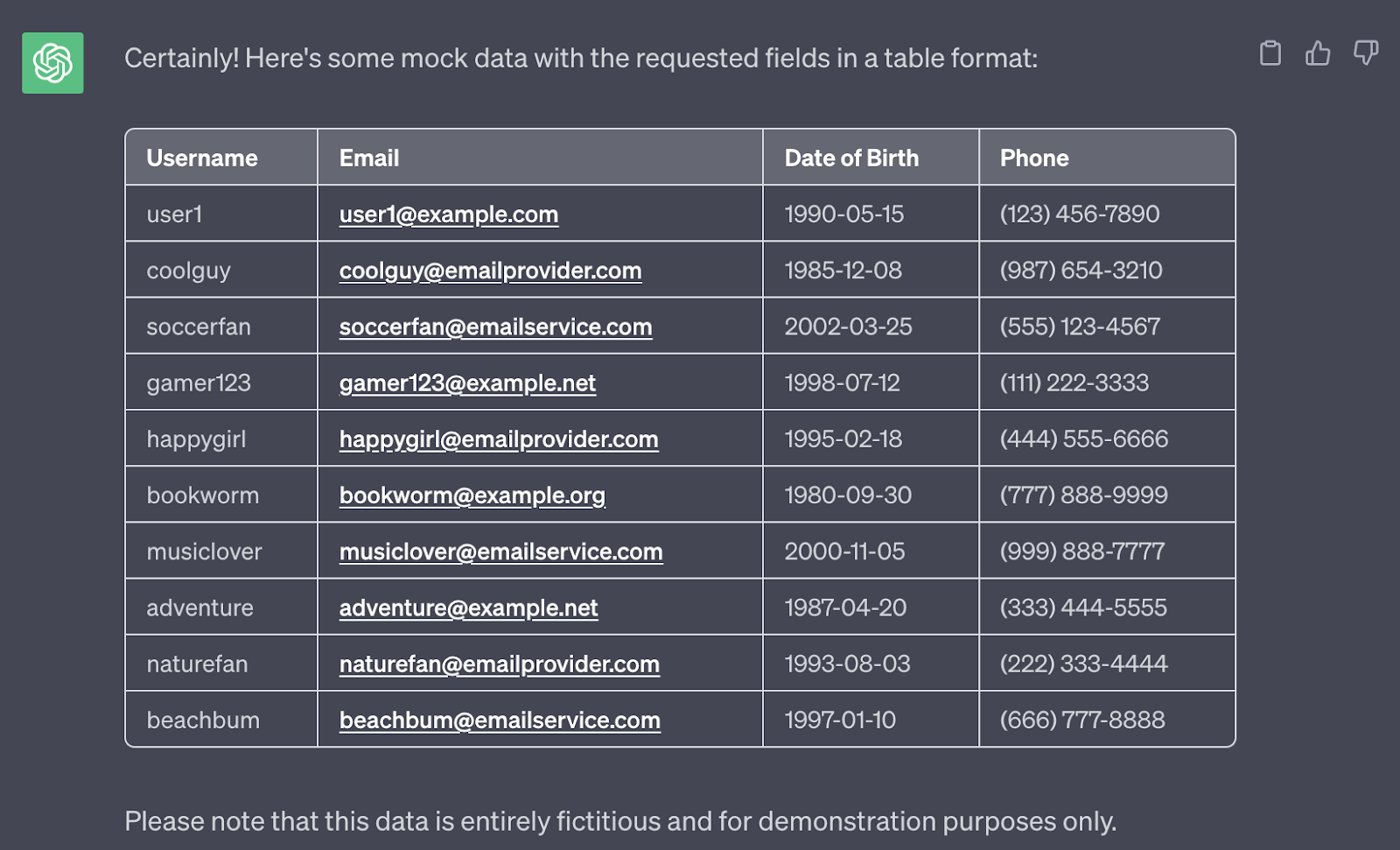

Style control. This technique instructs AI models to format a response in a particular way, such as text marked up with headings and subheadings, bullet points, or a table.

Prompt example: “Generate mock data with the following fields: Username, Email, Date of Birth, and Phone. Show the result in a table. The table should contain only ten rows.”

Response: a table with the four requested columns and ten rows of mock data.
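Because style control asks for a fixed structure, the reply can be validated in code before it is used. A sketch assuming the model returns a Markdown pipe table (the article does not say which table syntax the model used); `parse_markdown_table` and `check_table` are hypothetical helpers and do not handle escaped `|` characters inside cells.

```python
EXPECTED_COLUMNS = ("Username", "Email", "Date of Birth", "Phone")


def parse_markdown_table(text: str) -> list[list[str]]:
    """Return table rows as lists of cell strings, skipping the |---| separator row."""
    rows = []
    for line in text.strip().splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # ignore any prose around the table
        cells = [c.strip() for c in line.strip("|").split("|")]
        if all(c and set(c) <= set("-: ") for c in cells):
            continue  # separator row such as |---|:---:|
        rows.append(cells)
    return rows


def check_table(text: str, columns=EXPECTED_COLUMNS, n_rows: int = 10) -> list[str]:
    """List the ways the reply breaks the requested format (empty list means OK)."""
    rows = parse_markdown_table(text)
    if not rows:
        return ["no Markdown table found"]
    problems = []
    header, body = rows[0], rows[1:]
    if header != list(columns):
        problems.append(f"unexpected columns: {header}")
    if len(body) != n_rows:
        problems.append(f"expected {n_rows} rows, got {len(body)}")
    if any(len(r) != len(header) for r in body):
        problems.append("some rows have the wrong number of cells")
    return problems
```

Returning a list of problems rather than a single boolean makes it straightforward to feed the specific failure back into a follow-up prompt.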

Seed text. This technique provides a starting point, or seed text, to initiate text generation. It is often used in creative writing to kickstart the AI’s creativity with an initial sentence or paragraph.

Prompt example: “Write a paragraph on the following topic: ‘Will AI conquer the world?’. Use this sentence as a starting point: ‘Many of you have seen a lot of movies where AI is out of control’”.

Response: “Many of you have seen a lot of movies where AI is out of control, wreaking chaos and posing existential threats to humanity. While these cinematic depictions may fuel our imagination, the reality of AI conquering the world is far more complex…”
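A seed-text prompt can be built from a template, and the reply checked to confirm it actually opens with the seed. A sketch with hypothetical helper names; ignoring case, extra whitespace, and trailing punctuation on the seed is an assumption about how strict the check should be.

```python
def seed_prompt(topic: str, seed: str) -> str:
    """Template mirroring the example prompt above."""
    return (f"Write a paragraph on the following topic: '{topic}'. "
            f"Use this sentence as a starting point: '{seed}'")


def _normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace to single spaces."""
    return " ".join(text.lower().split())


def starts_with_seed(response: str, seed: str) -> bool:
    """True if the reply opens with the seed sentence."""
    return _normalize(response).startswith(_normalize(seed).rstrip(".!?"))


seed = "Many of you have seen a lot of movies where AI is out of control"
reply = ("Many of you have seen a lot of movies where AI is out of control, "
         "wreaking chaos and posing existential threats to humanity.")
print(starts_with_seed(reply, seed))  # True
```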

Break into subtasks. This technique instructs the AI to break a complex task into smaller, manageable steps.

Prompt example: “Provide a summary of the book ‘The Shining’ by Stephen King. Please follow these steps:

1. When the book was written.

2. Genre of the book.

3. Names of main characters and their relations with each other.

4. Short plot of the book.”

Response:

Certainly, here’s a summary of Stephen King’s novel “The Shining”:

1. When was the book written: “The Shining” was written by Stephen King and published in 1977.

2. Genre: It is primarily a horror novel with psychological thriller and supernatural fiction elements.

3. Main characters and their relations:

- Jack Torrance: He is the protagonist of the story, a struggling writer and recovering alcoholic who becomes the winter caretaker of the Overlook Hotel.

- Wendy Torrance: Jack’s wife, who is devoted to her family and becomes increasingly concerned about her husband’s behavior.

- Danny Torrance: Jack and Wendy’s young son, who possesses psychic abilities known as “the shining.” He bonds powerfully with the Overlook Hotel’s cook, Dick Hallorann.

- Dick Hallorann: The hotel’s cook, who also possesses “the shining” and becomes a mentor and protector to Danny.

4. Plot: The Torrance family moves to the isolated and haunted Overlook Hotel during the off-season, where Jack has taken a job as the winter caretaker. As the hotel becomes cut off from the outside world due to heavy snowfall, Jack’s mental stability deteriorates, influenced by the evil forces within the hotel. Meanwhile, Danny’s psychic abilities reveal the hotel’s dark history and propensity to awaken supernatural horrors. The novel explores addiction, family, isolation, and the battle between good and evil as the Torrance family confronts the terrifying and vicious entities within the Overlook Hotel.
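The subtask prompt above is a task followed by a numbered list, so it can be generated from a list of steps, and a numbered reply can be checked for skipped steps. A minimal sketch; `subtask_prompt` and `missing_steps` are hypothetical, and the check only looks for lines that begin with each step number.

```python
import re


def subtask_prompt(task: str, steps: list[str]) -> str:
    """Append a numbered list of steps to the task description."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{task} Please follow these steps:\n{numbered}"


def missing_steps(reply: str, n_steps: int) -> list[int]:
    """Return the step numbers that do not start any line of the reply."""
    return [k for k in range(1, n_steps + 1)
            if not re.search(rf"^\s*{k}\.", reply, flags=re.MULTILINE)]


prompt = subtask_prompt(
    "Provide a summary of the book 'The Shining' by Stephen King.",
    ["When the book was written.", "Genre of the book.",
     "Names of main characters and their relations with each other.",
     "Short plot of the book."],
)
print(prompt)
```

For instance, `missing_steps("1. 1977\n2.Horror\n4. Plot summary", 4)` returns `[3]`, flagging the skipped character list.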

Chain of thought. This technique instructs the AI to work through a topic or problem step by step, with each point building logically on the previous one, rather than jumping straight to a conclusion. It encourages a structured, interconnected flow of ideas.

Prompt example: “Create a marketing pitch for a new fitness app. Start with the app’s key features.”

Response: “Introducing our revolutionary fitness app! It offers personalized workout plans, real-time progress tracking, and access to certified trainers. With our app, you can achieve your fitness goals faster than ever.”
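In its widely used form, chain-of-thought prompting asks the model to reason step by step before stating a final answer; marking that answer lets an application separate it from the reasoning. A sketch of that variant, which differs from the marketing example above; the `Answer:` marker is an arbitrary convention chosen here, and the reply string is invented for illustration rather than real model output.

```python
import re
from typing import Optional


def cot_prompt(question: str) -> str:
    """Ask for step-by-step reasoning followed by a clearly marked final answer."""
    return (f"{question}\n\n"
            "Think through the problem step by step. "
            "Then give the final answer on its own line, starting with 'Answer:'.")


def final_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^\s*Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = cot_prompt("A store sells pens in packs of 12. How many pens are in 3 packs?")
# Invented reply, standing in for real model output:
reply = "Each pack has 12 pens.\n3 packs have 3 * 12 = 36 pens.\nAnswer: 36"
print(final_answer(reply))  # 36
```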

Avoid hallucinations. This guideline adds instructions that discourage AI models from generating false, misleading, or invented information. Keeping generated content accurate, factual, and reliable is essential, especially where misinformation could cause harm. Such instructions reduce the risk of hallucinations but do not eliminate it.

Prompt example: “Please generate a code snippet for a responsive navigation menu using HTML and CSS. Ensure that the code adheres to best practices, avoids speculative or erroneous techniques, and provides a functional and visually appealing navigation menu.”
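One common way to reduce hallucinations in question answering is to supply the source material and tell the model to answer only from it, with a fixed fallback when the answer is missing. A sketch of that approach, which is not a guarantee, since the model can still ignore the instruction; `FALLBACK`, `grounded_prompt`, and `is_fallback` are hypothetical names.

```python
FALLBACK = "I don't know."  # hypothetical fixed reply for unanswerable questions


def grounded_prompt(question: str, context: str) -> str:
    """Restrict the model to the supplied context and define a fallback reply."""
    return (
        "Answer the question using only the information in the context below. "
        f"If the context does not contain the answer, reply exactly: {FALLBACK}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def is_fallback(reply: str) -> bool:
    """True if the model used the agreed fallback instead of answering."""
    return reply.strip() == FALLBACK
```

A fixed fallback string lets the application detect "no answer" reliably instead of trying to interpret free-form hedging.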

System and user roles. This technique instructs the AI to respond as a specific role, such as a customer support agent, an administrator, or a teacher, to simulate context-appropriate interactions. In chat-based systems, the role is typically set in a system message, while the user’s request arrives as a user message. Assigning a role helps tailor responses to the expectations of that role.

Prompt example: “Respond as a customer support agent to a user inquiry about a product refund.”

Response: “Thank you for contacting us regarding your product refund. We apologize for any inconvenience you’ve experienced. Please provide your order number and a brief description of the issue. Our team will review your request and work to process your refund as quickly as possible.”
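Many chat-model APIs, including OpenAI's Chat Completions API, accept a list of messages, each with a `role` (`"system"`, `"user"`, or `"assistant"`) and `content`. A sketch that only builds that list, assuming the role description goes in the system message; `build_messages` is a hypothetical helper, and sending the list is left to whichever client library you use.

```python
from typing import Optional


def build_messages(role_description: str, user_message: str,
                   history: Optional[list[dict]] = None) -> list[dict]:
    """System message first, then any earlier turns, then the new user message."""
    messages = [{"role": "system", "content": role_description}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_message})
    return messages


messages = build_messages(
    "You are a customer support agent for an online store. Be polite and concise.",
    "I'd like a refund for my order.",
)
```

Keeping the role in the system message means it persists across turns, while each new user message is appended after the conversation history.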

Clarifications. This technique involves instructing AI to seek clarification when encountering ambiguity or uncertainty in a user’s query or instruction. It encourages the AI to ask follow-up questions to understand the user’s intent better.

Prompt example: “You’re a virtual assistant. The user asks, ‘What’s the weather like today?’ If the user’s location is not specified, use a clarification prompt to ask for their current location.”

Response:

User: “What’s the weather like today?”

Virtual Assistant: “Certainly, I can help with that. Could you please provide me with your current location so I can check the weather accurately?”
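The prompt above leaves the clarification to the model. When the application itself knows a required detail is missing, it can ask before sending any prompt. A sketch with a hypothetical `next_step` helper that reuses the assistant's question from the example; where the location would come from (a user profile, a device API) is outside its scope.

```python
from typing import Optional

CLARIFY = ("Certainly, I can help with that. Could you please provide me with "
           "your current location so I can check the weather accurately?")


def next_step(query: str, location: Optional[str]) -> tuple[str, str]:
    """Decide whether to ask the user for their location or build the model prompt."""
    if not location:
        return ("ask_user", CLARIFY)
    return ("send_prompt", f"User location: {location}\nUser question: {query}")


action, text = next_step("What's the weather like today?", None)
print(action)  # ask_user
```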

To sum up

In conclusion, prompt engineering guides AI models toward the answers we need by shaping their output for precision, relevance, and coherence. Done well, it makes AI responses more accurate and our interactions with technology smoother and more helpful.